Seedance 1.0 Lite by ByteDance: What You Need to Know

ByteDance recently launched Seedance 1.0 Lite—and it’s causing a stir. New video models drop all the time. Most vanish in a day. But this one? It’s quietly doing a lot of things right, and for a fraction of the cost of some big-name competitors. Here’s all you need to know about it!

Want to skip right to the good stuff? ByteDance Seedance 1.0 Lite is live in our Video Generator. Try it right now!

What is ByteDance Seedance?

Seedance 1.0 Lite is a diffusion-based Text to Video and Image to Video model developed by ByteDance—the same folks behind TikTok, CapCut, and the recent Seedream 3.0 image model (which you can also try in our Image Generator, by the way).

It’s designed for short-form video generation, producing clips of 5 or 10 seconds at up to 720p resolution. You can generate from a text prompt alone, or pair a prompt with an image that guides the first frame. The result? Surprisingly stable, visually rich video with a decent sense of structure, and all of it runs smoothly in the browser through our interface.

The “Lite” in the name doesn’t mean stripped-down junk. It refers to the model’s optimized inference speed and lower compute cost, which is exactly what you want when you’re testing a dozen ideas in a row.

ByteDance’s AI Ambitions Are Very Real

While OpenAI and Google tend to dominate the headlines, ByteDance has been quietly building a serious foundation in generative AI. From Seedream 3.0 (its ultra-detailed Text to Image model) to multimodal LLMs like Doubao, the company’s model roadmap is increasingly impressive—and increasingly public.

Seedance 1.0 was trained on a heavily curated video dataset, with strong caption alignment and careful data balancing. It pairs a variational autoencoder (VAE) with a transformer-based diffusion model and handles both text-to-video and image-to-video in a single, unified architecture. That means it doesn’t treat either task as a bolt-on; it learns them jointly. You can feel that in the consistency of the results.

What Seedance Does Well

Let’s be specific. Here’s where this model genuinely shines:

✅ Solid structure and motion

Characters don’t melt halfway through a scene. Motion is coherent and deliberate. There’s an unusually good sense of spatiotemporal fluidity—especially considering the speed.

✅ Works across multiple styles

Stylized prompts (anime, claymation, cyberpunk, oil painting) actually land, so you don’t have to fight the model to get them. You can create realistic shots or dreamlike animations, and it holds up on both.

✅ 10-second clips are no problem

Many other wallet-friendly models cap you at 5 seconds. Seedance comfortably generates up to 10 seconds of footage, making it easier to express complete moments or short narrative arcs.

✅ Fast and affordable

Compared to heavyweights like Google Veo or top Kling variants, Seedance is cheaper and easier to try. That makes it ideal for experimentation, iteration, or just having fun.

Audio isn’t part of the package

Unlike Google Veo 3, which can generate synchronized dialogue and ambient soundtracks, Seedance doesn’t touch audio. You’ll need to add sound separately.

How to prompt Seedance like a pro

Seedance was trained on dense video captions—not short vibes. It responds best to prompts that are specific, visual, and action-focused. Here’s how to approach it:

Start with one subject doing one thing in one place

Use clear verbs (“running,” “spinning,” “shouting,” not “cool vibes”)

Include visual or style cues last (“foggy forest,” “neon lights,” “claymation look”)

Avoid conflicting directions—don’t stack fast and slow or ask for “chaos and symmetry”
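If you write a lot of prompts, the checklist above is easy to turn into a reusable template. Below is a minimal Python sketch, assuming you assemble prompts as plain strings before pasting them into the Video Generator. The names `build_prompt`, `find_conflicts`, and `CONFLICTING_PAIRS` are hypothetical and made up for illustration; they are not part of any Seedance or ByteDance API, and the conflict list only covers the two examples mentioned here.

```python
import re

# Hypothetical, illustrative list of directions that pull against each other.
# It is not exhaustive and does not come from Seedance itself.
CONFLICTING_PAIRS = [("fast", "slow"), ("chaos", "symmetry")]


def find_conflicts(text: str) -> list[tuple[str, str]]:
    """Return every conflicting pair whose two words both appear in the text."""
    # Lowercase and split on anything that isn't a letter, so "fast-paced"
    # still counts as containing "fast".
    words = set(re.findall(r"[a-z]+", text.lower()))
    return [(a, b) for a, b in CONFLICTING_PAIRS if a in words and b in words]


def build_prompt(subject: str, action: str, place: str, style: str = "") -> str:
    """Compose one subject doing one thing in one place, with style cues last."""
    prompt = f"{subject} {action} {place}"
    if style:
        # Style or look-and-feel cues go at the very end of the prompt.
        prompt += f", {style}"
    conflicts = find_conflicts(prompt)
    if conflicts:
        raise ValueError(f"conflicting directions in prompt: {conflicts}")
    return prompt


if __name__ == "__main__":
    print(build_prompt("a red fox", "running", "through a foggy forest", "claymation look"))
```

Keeping the style cue as an optional last argument mirrors the advice to put visual cues at the end. The conflict check is a plain word match, so it will miss paraphrases such as “sluggish” versus “rapid”; treat it as a reminder, not a guarantee.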

For Image to Video, use your image as a visual anchor. Then describe what moves next. For example:

“the cat’s ears twitch as it blinks and stretches”

“the astronaut slowly turns their head toward the camera”

“the leaves behind the subject begin to blow sideways in strong wind”

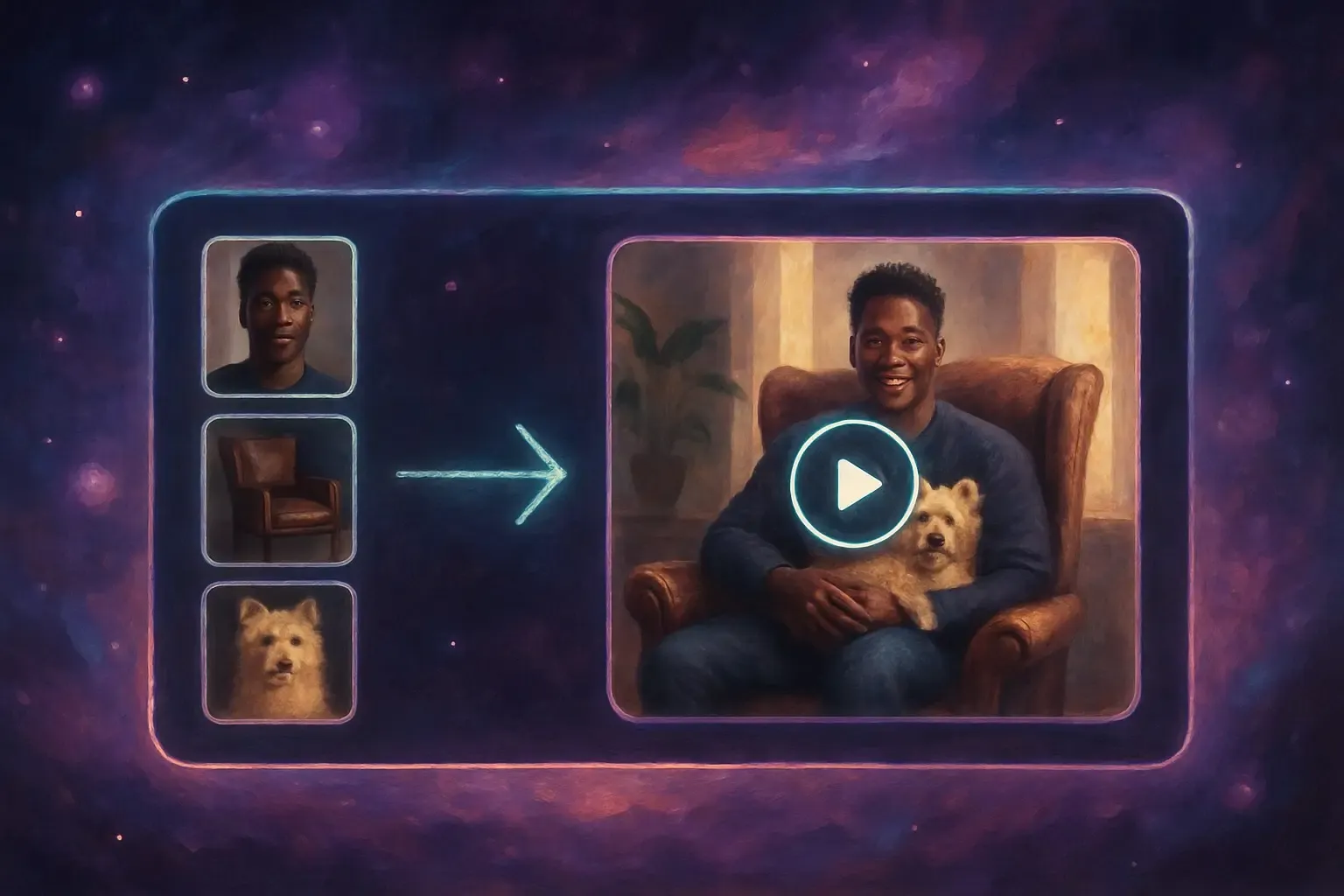

Take a look at how we transformed this image:


Into this unique clip:

A welcome addition to the AI video ecosystem

Seedance 1.0 Lite isn’t just another flashy release. It’s a well-built, reasonably priced, and technically thoughtful model that pushes the quality bar forward without pretending to be the final word on AI video. And that’s refreshing.

Even more importantly, it raises the level of competition. ByteDance’s entry nudges other players to stay sharp and keep improving, especially when it comes to model affordability.

If you're curious to try it, head to our Video Generator and give it a spin. We’re already seeing some wildly creative results, and yours could be next.