Guide to IP Adapters

Elevate your AI art game with IP Adapters. Read our guide and learn to inject your images with specific visual elements for stunning results.

What are IP Adapters?

IP Adapters extract specific visual elements from reference images and apply them to new AI-generated artwork. They are available for getimg.ai models in Image Generator’s Essential mode and for all Stable Diffusion XL-based models in the SD mode. Models based on SDXL are marked with an “XL” tag in the model selection menu.

These adapters analyze a reference image you provide and extract different visual characteristics depending on the adapter type. You can choose from three IP Adapter types: Style, Content, and Character. The AI then uses the extracted information to guide the generation of your new image.

Types of IP Adapters

Style

The Style IP Adapter extracts color values, lighting, and overall artistic style from your reference image. It's great for capturing an image's mood and atmosphere without necessarily replicating its content.

That makes it an invaluable tool for artists and marketers alike. Simple and effective ways of boosting your marketing workflow with the Style feature include:

- applying your brand’s color scheme to stock photos,

- generating featured images for your blog posts in a consistent but unique abstract style,

- designing email headers with a cohesive neon aesthetic.

Example: a Style reference image applied to images generated with the prompts “beach”, “city”, and “forest”.

Content

The Content IP Adapter captures nearly everything visible in the reference image, including architecture, characters, objects, and scenery. This makes it a simple way to recreate specific elements or scenes in a new context.

This adapter comes in handy in many situations. For example, if you’re a game designer, you could use it to:

- create multiple versions of a sci-fi weapon design,

- produce different iterations of a boss character,

- develop diverse biome concepts based on a single landscape.

Example: a Content reference image applied to an image generated with the prompt “winter”.

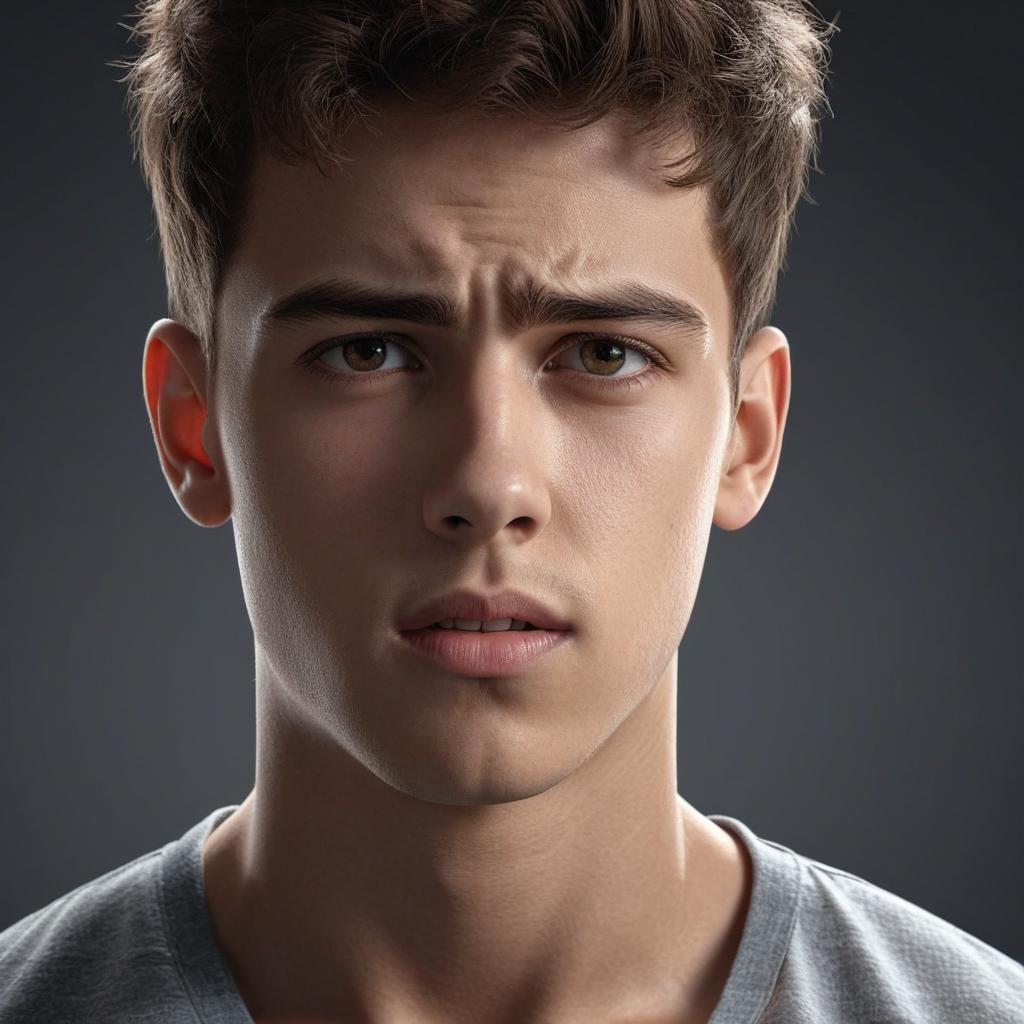

Character

The Character IP Adapter looks specifically for a character’s visual traits, focusing on facial features. This makes it easy to keep a character’s appearance consistent across different images.

It’s designed for human characters; we don’t recommend using it for pets, fantastical creatures, or similar subjects.

For example, if you’re creating a visual novel or a comic book, it allows you to:

- reimagine your character in different art styles,

- create age progressions of a character throughout a story,

- develop a character's look across different time periods.

Example: a Character reference image applied to images generated with the prompts “ancient Roman senator”, “XVI century aristocrat”, and “WW1 era soldier”.

Using IP Adapters

Step 1. Select a model and write a prompt

Go to the Image Generator and choose the style or model you'd like to use. Remember, IP Adapters work with getimg.ai models in the Essential mode and with all Stable Diffusion XL-based models (marked with an “XL” tag) in the SD mode.

For optimal results, select a model that aligns with your desired outcome. For instance, if you're using the Style IP Adapter to replicate the style of your favorite painter, the “Artistic” getimg.ai model in the Essential mode could be a good choice.

Then, write a prompt describing the image you want to create. For example, if you're using a Character IP Adapter with a reference image of a person, your prompt might be “fantasy warrior in battle armor”.

Step 2. Upload your reference image

In the Image Reference section of the Image Generator, upload the image you want to use as a reference. You can add an image from:

- your device: by dragging the file from its location into the designated area or by clicking the upload button to select a file manually,

- Image Generator: by dragging an image from the generation results or by hovering over it and clicking the “Use as image reference” icon.

Unlike the Image to Image and ControlNet features, IP Adapters don't require your reference image to match the aspect ratio of your desired output. They can extract the necessary visual information regardless of the reference image's resolution or aspect ratio.

Step 3. Choose IP Adapter type and adjust the reference strength

Select the type of IP Adapter you want to use: Style, Content, or Character. Your choice should depend on the elements you want to extract from your reference image and apply to your new creation.

Adjust the reference strength with the slider to determine how strongly the extracted elements will influence your final image:

- a lower strength means a weaker influence from the reference image,

- a higher strength means a stronger influence from the reference image.

Experiment with different strength levels to find the right balance for your project. To avoid abstract results, it’s usually best to keep the reference strength below 100.
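getimg.ai doesn't document how the reference strength slider works internally. In the original open-source IP-Adapter design, the reference image's features get their own cross-attention branch, and that branch's output is multiplied by a weight and added to the output of the text prompt's branch. The minimal pure-Python sketch below illustrates the idea with toy vectors. The `strength_to_scale` mapping (slider value divided by 100) is a hypothetical assumption, not getimg.ai's documented behavior.

```python
import math

# Sketch of IP-Adapter-style decoupled cross-attention: the text prompt and the
# reference image each get their own keys/values, and the image branch's output
# is scaled by a weight before being added to the text branch's output.


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def decoupled_cross_attention(query, text_kv, image_kv, scale):
    """Text attention output plus `scale` times the reference-image attention output."""
    text_out = attention(query, *text_kv)
    image_out = attention(query, *image_kv)
    return [t + scale * im for t, im in zip(text_out, image_out)]


def strength_to_scale(strength):
    """HYPOTHETICAL mapping from the reference strength slider to the scale."""
    return strength / 100


# Toy 2-D example: two text tokens and one reference-image token.
query = [1.0, 0.0]
text_kv = ([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])  # (keys, values)
image_kv = ([[0.5, 0.5]], [[0.0, 2.0]])                          # (keys, values)

for strength in (0, 50, 100):
    out = decoupled_cross_attention(query, text_kv, image_kv, strength_to_scale(strength))
    print(strength, [round(x, 3) for x in out])
```

At strength 0 the output is exactly the text-only result, and each step up the slider pushes it further toward the reference image's contribution. Under this assumed mapping, a strength of 100 weights the reference branch as heavily as the text prompt, and anything higher weights it more heavily, which fits the advice to stay below 100.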

Step 4. Generate your images

Choose the number of images you want to generate (from one to ten) and click the "Create images" button to start the generation process.

Don't hesitate to experiment with different prompts, reference images, adapter types, and strength settings to discover the full potential of IP Adapters.

Combine Image to Image, different IP Adapters, and ControlNet models with Multiple Image References to unlock even more creative possibilities. For example, you could guide image generation with Character and Style references simultaneously.
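If you plan generations in a script or a checklist, the settings covered in Steps 1 to 4 fit into a small data model. The sketch below is hypothetical: `GenerationSettings`, `ImageReference`, and `check` are illustrative names, not a getimg.ai API. The checks encode only the rules stated in this guide: an XL-tagged model in SD mode, one to ten images, three adapter types, and a recommended strength below 100. The usage example combines Character and Style references.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL model of the settings described in this guide (not a getimg.ai API).
ADAPTER_TYPES = {"Style", "Content", "Character"}


@dataclass
class ImageReference:
    image_path: str
    adapter: str   # one of ADAPTER_TYPES
    strength: int  # reference strength slider value


@dataclass
class GenerationSettings:
    mode: str             # "Essential" (getimg.ai models) or "SD"
    model_tags: set[str]  # e.g. {"XL"} for SDXL-based models in SD mode
    prompt: str
    num_images: int = 1
    references: list[ImageReference] = field(default_factory=list)

    def check(self) -> list[str]:
        """Raise ValueError for settings the guide rules out; return soft warnings."""
        if self.mode not in ("Essential", "SD"):
            raise ValueError(f"unknown mode: {self.mode}")
        if self.mode == "SD" and "XL" not in self.model_tags:
            raise ValueError("in SD mode, IP Adapters need an SDXL-based (XL) model")
        if not 1 <= self.num_images <= 10:
            raise ValueError("num_images must be between 1 and 10")
        warnings = []
        for ref in self.references:
            if ref.adapter not in ADAPTER_TYPES:
                raise ValueError(f"unknown adapter type: {ref.adapter}")
            if ref.strength >= 100:
                warnings.append(f"{ref.image_path}: strength {ref.strength} may give abstract results")
        return warnings


# Character and Style references guiding the same generation.
settings = GenerationSettings(
    mode="SD",
    model_tags={"XL"},
    prompt="fantasy warrior in battle armor",
    num_images=4,
    references=[
        ImageReference("portrait.png", "Character", 80),
        ImageReference("painting.png", "Style", 120),
    ],
)
print(settings.check())
```

Hard errors are kept separate from warnings because the guide presents a strength of 100 or more as a judgment call rather than a limit.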